
Insights: Foundation Model Generalization

What Should "Generalization" Mean in EO?

In standard machine learning, generalization usually means: can this model make good predictions on data it hasn’t seen before?

In Earth observation, that question is far more complex.

Because “unseen data” doesn’t just mean random holdout pixels—it means:

🌍 𝗨𝗻𝘀𝗲𝗲𝗻 𝗿𝗲𝗴𝗶𝗼𝗻𝘀 (new countries, ecosystems, terrain)

🌤️ 𝗨𝗻𝘀𝗲𝗲𝗻 𝗰𝗼𝗻𝗱𝗶𝘁𝗶𝗼𝗻𝘀 (different seasons, phenological stages)

📅 𝗨𝗻𝘀𝗲𝗲𝗻 𝘁𝗶𝗺𝗲 𝗽𝗲𝗿𝗶𝗼𝗱𝘀 (climate extremes, future scenarios)

EO models need to generalize not just in a statistical sense, but across 𝘀𝗽𝗮𝗰𝗲 𝗮𝗻𝗱 𝘁𝗶𝗺𝗲.

That’s what makes foundation models so promising—and so hard to evaluate.

🚨 A model that performs well on a random test split may fail in:

- A wildfire event in a previously cool, wet region

- A flood zone with sparse cloud-free imagery

- An agricultural zone with different crop mixes or growing seasons

So what should real generalization look like?

It should mean:

- Learning patterns that hold across 𝗴𝗲𝗼𝗴𝗿𝗮𝗽𝗵𝘆 𝗮𝗻𝗱 𝘁𝗶𝗺𝗲, not just datasets

- Succeeding in 𝘂𝗻𝗱𝗲𝗿𝗿𝗲𝗽𝗿𝗲𝘀𝗲𝗻𝘁𝗲𝗱 𝗿𝗲𝗴𝗶𝗼𝗻𝘀, not just well-studied ones

- Anticipating 𝗻𝗼𝘃𝗲𝗹 𝗰𝗼𝗻𝗱𝗶𝘁𝗶𝗼𝗻𝘀, not just repeating past ones

That requires new evaluation strategies:

- Spatially disjoint test sets

- Temporally shifted scenarios

- Benchmarks that reflect real deployment settings, not just random splits
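One way to build the first two kinds of test set is sketched below. It is a minimal illustration, assuming every sample carries a longitude, latitude, and acquisition year. The function names, the 5-degree block size, and the 20% test fraction are hypothetical choices for the example, not taken from any particular benchmark.

```python
import numpy as np

def spatial_block_split(lon, lat, block_deg=5.0, test_frac=0.2, seed=0):
    """Assign whole lon/lat blocks to train or test so the two never share a block."""
    lon = np.asarray(lon, dtype=float)
    lat = np.asarray(lat, dtype=float)
    # Integer block index of each sample along each axis.
    blocks = np.stack([np.floor(lon / block_deg), np.floor(lat / block_deg)], axis=1).astype(int)
    # Unique blocks and, for each sample, the id of the block it falls in.
    uniq, block_id = np.unique(blocks, axis=0, return_inverse=True)
    block_id = block_id.reshape(-1)
    rng = np.random.default_rng(seed)
    n_test = max(1, int(round(test_frac * len(uniq))))
    test_blocks = rng.choice(len(uniq), size=n_test, replace=False)
    is_test = np.isin(block_id, test_blocks)
    return ~is_test, is_test, block_id

def temporal_split(years, first_test_year):
    """Train on everything before first_test_year, test on that year and later."""
    years = np.asarray(years)
    is_test = years >= first_test_year
    return ~is_test, is_test

# Example with synthetic sample locations and years (hypothetical data).
rng = np.random.default_rng(42)
lon = rng.uniform(-180, 180, 1000)
lat = rng.uniform(-60, 75, 1000)
years = rng.integers(2017, 2025, 1000)
train_s, test_s, block_id = spatial_block_split(lon, lat)
train_t, test_t = temporal_split(years, first_test_year=2023)
```

Holding out whole blocks rather than individual pixels keeps spatially autocorrelated neighbours from leaking between train and test, which is the usual reason random splits look optimistic.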

📌 𝗘𝗢 𝗶𝘀 𝗮 𝗱𝗼𝗺𝗮𝗶𝗻 𝘄𝗵𝗲𝗿𝗲 𝗴𝗲𝗻𝗲𝗿𝗮𝗹𝗶𝘇𝗮𝘁𝗶𝗼𝗻 𝗶𝘀𝗻’𝘁 𝗼𝗽𝘁𝗶𝗼𝗻𝗮𝗹—it’s the whole point.

The real test of a model isn’t how it performs on yesterday’s data—it’s whether it will work when the world changes tomorrow.

👇 𝗪𝗵𝗮𝘁 𝗱𝗼𝗲𝘀 𝗴𝗲𝗻𝗲𝗿𝗮𝗹𝗶𝘇𝗮𝘁𝗶𝗼𝗻 𝗺𝗲𝗮𝗻 𝘁𝗼 𝘆𝗼𝘂 𝗶𝗻 𝘁𝗵𝗲 𝗰𝗼𝗻𝘁𝗲𝘅𝘁 𝗼𝗳 𝗘𝗢?

𝗛𝗼𝘄 𝗱𝗼 𝘆𝗼𝘂 𝘁𝗲𝘀𝘁 𝘆𝗼𝘂𝗿 𝗺𝗼𝗱𝗲𝗹𝘀’ 𝗮𝗯𝗶𝗹𝗶𝘁𝘆 𝘁𝗼 𝗵𝗮𝗻𝗱𝗹𝗲 𝘁𝗵𝗲 𝘂𝗻𝗸𝗻𝗼𝘄𝗻?


Insights: Biomarker Development

𝗔 𝘀𝗶𝗻𝗴𝗹𝗲 𝘄𝗲𝗮𝗸 𝗯𝗶𝗼𝗺𝗮𝗿𝗸𝗲𝗿 𝗰𝗮𝗻 𝗾𝘂𝗶𝗲𝘁𝗹𝘆 𝗱𝗿𝗮𝗶𝗻 $𝟮𝟬–𝟯𝟬𝟬𝗠 𝗳𝗿𝗼𝗺 𝘆𝗼𝘂𝗿 𝗥&𝗗 𝗯𝘂𝗱𝗴𝗲𝘁.

I’ve seen it play out two very different ways.

𝗧𝗲𝗮𝗺 𝗔 celebrated their internal validation results — accuracy looked fantastic. But once trial sites broadened, performance plummeted. Regulators demanded new evidence. The fix? A year of re-validation, $𝟮𝟬𝗠+ in added costs, and a stalled program that let competitors pull ahead.

𝗧𝗲𝗮𝗺 𝗕 asked a tougher question upfront: 𝘄𝗶𝗹𝗹 𝘁𝗵𝗶𝘀 𝗯𝗶𝗼𝗺𝗮𝗿𝗸𝗲𝗿 𝗵𝗼𝗹𝗱 𝗮𝗰𝗿𝗼𝘀𝘀 𝗿𝗲𝗮𝗹-𝘄𝗼𝗿𝗹𝗱 𝘃𝗮𝗿𝗶𝗮𝗯𝗶𝗹𝗶𝘁𝘆? They caught staining shifts early, adjusted their pipeline, and advanced on schedule. Their regulatory review went smoothly. Their portfolio risk went down.

📊 𝗧𝗵𝗲 𝗥𝗢𝗜 𝗼𝗳 𝗲𝗮𝗿𝗹𝘆 𝗼𝘃𝗲𝗿𝘀𝗶𝗴𝗵𝘁 𝗶𝘀 𝗰𝗹𝗲𝗮𝗿 — 𝗲𝘃𝗲𝗻 𝘂𝘀𝗶𝗻𝗴 𝗰𝗼𝗻𝘀𝗲𝗿𝘃𝗮𝘁𝗶𝘃𝗲 𝗮𝘀𝘀𝘂𝗺𝗽𝘁𝗶𝗼𝗻𝘀:

- An average Phase 2 oncology trial costs $20–40M. A modest 5% reduction in failure risk protects roughly $1–2M in expected cost per trial.

- An average Phase 3 oncology trial costs $200–300M. The same 5% improvement protects roughly $10–15M per trial.

- Re-validation costs ($10–20M+) are avoided if gaps are caught early.

- Delays of 6–12 months can mean $100–200M in lost revenue (IQVIA, 2021).
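The per-trial figures above follow from simple expected-value arithmetic: trial cost multiplied by the reduction in failure probability. The sketch below reproduces them under that assumption, which treats the full trial cost as lost on failure. The function name `safeguarded` is hypothetical.

```python
def safeguarded(cost_low_m, cost_high_m, risk_reduction=0.05):
    """Expected cost protected (in $M) for a trial cost range and a drop in failure probability."""
    return cost_low_m * risk_reduction, cost_high_m * risk_reduction

phase2 = safeguarded(20, 40)    # Phase 2 trial costing $20-40M
phase3 = safeguarded(200, 300)  # Phase 3 trial costing $200-300M

print(f"Phase 2: ${phase2[0]:.0f}-{phase2[1]:.0f}M safeguarded per trial")
print(f"Phase 3: ${phase3[0]:.0f}-{phase3[1]:.0f}M safeguarded per trial")
```

Swapping in your own cost range and risk reduction shows how sensitive the estimate is to each assumption.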

💡 𝗔𝗻𝗱 𝘁𝗵𝗮𝘁’𝘀 𝗷𝘂𝘀𝘁 𝘁𝗵𝗲 𝗰𝗼𝗻𝘀𝗲𝗿𝘃𝗮𝘁𝗶𝘃𝗲 𝗰𝗮𝘀𝗲. In practice, catching even one major robustness issue early can save months of rework and tens of millions in capital — while keeping programs on schedule and competitive.

👉 𝗪𝗵𝗲𝗿𝗲 𝗱𝗼 𝘆𝗼𝘂 𝘀𝗲𝗲 𝘁𝗵𝗲 𝗯𝗶𝗴𝗴𝗲𝘀𝘁 𝗳𝗿𝗮𝗴𝗶𝗹𝗶𝘁𝘆 𝗶𝗻 𝗯𝗶𝗼𝗺𝗮𝗿𝗸𝗲𝗿 𝗱𝗲𝘃𝗲𝗹𝗼𝗽𝗺𝗲𝗻𝘁 — 𝗱𝗮𝘁𝗮, 𝘃𝗮𝗿𝗶𝗮𝗯𝗶𝗹𝗶𝘁𝘆, 𝗼𝗿 𝘁𝗶𝗺𝗲𝗹𝗶𝗻𝗲𝘀?


Research: Grounding

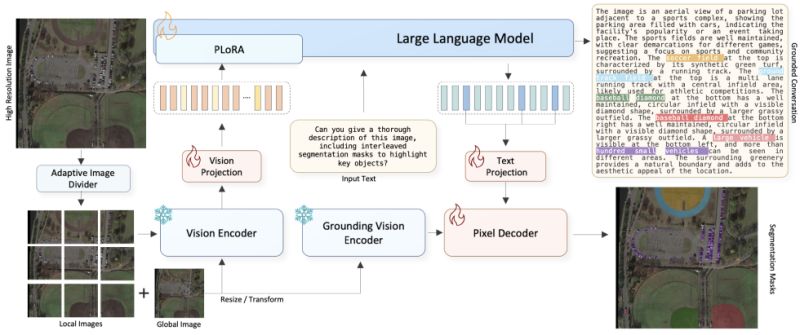

GeoPixel: Pixel Grounding Large Multimodal Model in Remote Sensing

While large multimodal models excel at understanding natural images, they struggle with satellite and aerial imagery. The unique overhead perspective, scale variation, and small objects in high-resolution remote sensing data present distinct challenges that current models can't handle effectively.

Akashah Shabbir et al. introduced GeoPixel, which they present as the first large multimodal model designed specifically for high-resolution remote sensing image analysis with pixel-level grounding: it can identify exactly which pixels in an image correspond to the objects it discusses.

𝗪𝗵𝘆 𝗥𝗲𝗺𝗼𝘁𝗲 𝗦𝗲𝗻𝘀𝗶𝗻𝗴 𝗶𝘀 𝗗𝗶𝗳𝗳𝗲𝗿𝗲𝗻𝘁:

Remote sensing imagery requires specialized understanding that general vision-language models lack:

• Overhead viewpoints create spatial relationships unlike natural photography

• Extreme scale variations - from individual vehicles to entire city blocks in one image

• Small objects distributed across vast areas require precise localization

• Scarce training data in which conversational text is linked to specific image regions

𝗧𝗵𝗲 𝗚𝗲𝗼𝗣𝗶𝘅𝗲𝗹 𝗔𝗽𝗽𝗿𝗼𝗮𝗰𝗵:

The system combines three key components to handle high-resolution imagery up to 4K:

• 𝗔𝗱𝗮𝗽𝘁𝗶𝘃𝗲 𝗶𝗺𝗮𝗴𝗲 𝗽𝗮𝗿𝘁𝗶𝘁𝗶𝗼𝗻𝗶𝗻𝗴: Divides images into local and global regions for efficient processing

• 𝗣𝗶𝘅𝗲𝗹-𝗹𝗲𝘃𝗲𝗹 𝗴𝗿𝗼𝘂𝗻𝗱𝗶𝗻𝗴: Generates precise segmentation masks that show exactly which pixels belong to each object mentioned

• 𝗜𝗻𝘁𝗲𝗿𝗹𝗲𝗮𝘃𝗲𝗱 𝗺𝗮𝘀𝗸 𝗴𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝗼𝗻: Produces detailed responses with corresponding visual masks in conversation
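To make the local/global partitioning idea concrete, here is a minimal sketch. It is not GeoPixel's implementation: the function name `partition_image`, the 512-pixel tile size, and the nearest-neighbour subsampling used for the global view are all assumptions chosen for illustration.

```python
import numpy as np

def partition_image(image, tile=512, global_size=512):
    """Return a coarse global view plus a list of (row, col, patch) local tiles."""
    h, w = image.shape[:2]
    # Global view: nearest-neighbour subsampling to at most global_size pixels per side.
    step = max(1, int(np.ceil(max(h, w) / global_size)))
    global_view = image[::step, ::step]
    # Local views: non-overlapping tiles; tiles on the right/bottom edge may be smaller.
    tiles = []
    for r in range(0, h, tile):
        for c in range(0, w, tile):
            tiles.append((r, c, image[r:r + tile, c:c + tile]))
    return global_view, tiles

# Example: a blank 4K-sized RGB image (hypothetical input).
image = np.zeros((2160, 3840, 3), dtype=np.uint8)
global_view, tiles = partition_image(image)
print(global_view.shape, len(tiles))
```

The number of tiles grows with image size, so small images need only a few local views while a 4K scene gets many, with the global view supplying the scene-level context that individual tiles lack.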

𝗧𝗵𝗲 𝗚𝗲𝗼𝗣𝗶𝘅𝗲𝗹𝗗 𝗗𝗮𝘁𝗮𝘀𝗲𝘁:

To enable grounded conversations, the researchers created a specialized dataset using a multi-tier annotation strategy:

• 54k grounded phrases linked to 600k objects

• Descriptions averaging 740 characters with rich spatial context

• Hierarchical annotations from scene-level context to individual object details

• 5k validated referring expression-mask pairs for evaluation

𝗞𝗲𝘆 𝗔𝗽𝗽𝗹𝗶𝗰𝗮𝘁𝗶𝗼𝗻𝘀:

This capability enables more precise analysis for:

• Urban planning and infrastructure mapping

• Environmental monitoring and change detection

• Disaster response and damage assessment

• Agricultural monitoring and precision farming

• Defense and security applications

𝗜𝗺𝗽𝗮𝗰𝘁:

GeoPixel addresses a critical gap in AI applications for EO. By enabling natural language conversations about satellite imagery with pixel-accurate grounding, it could accelerate decision-making in fields from urban planning to climate research. The model and dataset are publicly available to advance the field.


Research: Geospatial FM for Ecology

SSL4Eco: A Global Seasonal Dataset for Geospatial Foundation Models in Ecology

What if current geospatial foundation models are missing critical ecological insights because they're trained on datasets biased toward cities and farms, while ignoring entire biomes like Arctic tundra and tropical rainforests?

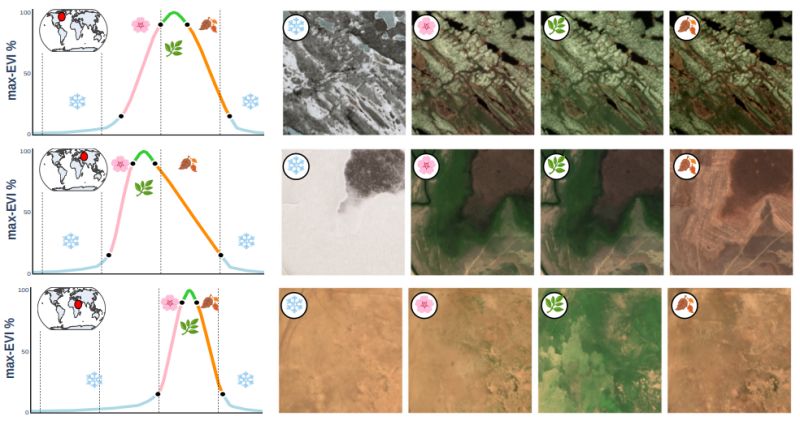

Elena Plekhanova et al. introduced SSL4Eco, a global Sentinel-2 dataset that prioritizes ecological diversity over human activity, at the CVPR 2025 EarthVision Workshop. It uses local vegetation cycles rather than calendar seasons to capture true environmental patterns.

𝐓𝐡𝐞 𝐞𝐜𝐨𝐥𝐨𝐠𝐢𝐜𝐚𝐥 𝐝𝐚𝐭𝐚 𝐛𝐢𝐚𝐬: Most geospatial foundation models are trained on datasets that sample around large cities, overrepresenting urban and agricultural areas while neglecting tropical rainforests (Earth's most biodiverse regions) and Arctic tundra (critical for climate models). Additionally, existing multi-temporal datasets use calendar seasons that ignore local phenological cycles - the reality that vegetation activity varies dramatically based on latitude, altitude, and local climate.

𝐊𝐞𝐲 𝐢𝐧𝐧𝐨𝐯𝐚𝐭𝐢𝐨𝐧𝐬:

∙ 𝐔𝐧𝐢𝐟𝐨𝐫𝐦 𝐠𝐥𝐨𝐛𝐚𝐥 𝐬𝐚𝐦𝐩𝐥𝐢𝐧𝐠: 250,000 locations distributed across the entire landmass using a 23 km regular grid, capturing all biomes without urban bias

∙ 𝐄𝐕𝐈-𝐛𝐚𝐬𝐞𝐝 𝐬𝐞𝐚𝐬𝐨𝐧𝐚𝐥 𝐬𝐚𝐦𝐩𝐥𝐢𝐧𝐠: Four dates per location based on local vegetation phenology using Enhanced Vegetation Index instead of calendar dates

∙ 𝐄𝐜𝐨𝐥𝐨𝐠𝐢𝐜𝐚𝐥 𝐞𝐯𝐚𝐥𝐮𝐚𝐭𝐢𝐨𝐧 𝐭𝐚𝐬𝐤𝐬: New benchmarks for biome classification, Arctic vegetation mapping, plant species prediction, and biomass estimation

∙ 𝐒𝐞𝐂𝐨-𝐄𝐜𝐨 𝐦𝐨𝐝𝐞𝐥: Foundation model trained with seasonal contrastive learning to capture both season-invariant and season-specific features
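The EVI-based sampling idea can be sketched as follows. The `evi` function uses the standard Enhanced Vegetation Index formula on surface reflectance in the 0 to 1 range. The date-selection rule in `four_phenology_dates` (trough, steepest green-up, peak, steepest senescence) is a hypothetical stand-in to illustrate picking dates from the local vegetation cycle. It is not the procedure from the SSL4Eco paper, and the monthly series is made up.

```python
import numpy as np

def evi(nir, red, blue):
    """Enhanced Vegetation Index from NIR, red, and blue surface reflectance (0-1)."""
    nir, red, blue = (np.asarray(b, dtype=float) for b in (nir, red, blue))
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)

def four_phenology_dates(evi_series):
    """Indices of four phenological stages in a cyclic annual EVI series (assumed rule)."""
    s = np.asarray(evi_series, dtype=float)
    # Change from each time step to the next, wrapping the last step back to the first.
    delta = np.roll(s, -1) - s
    return {
        "trough": int(np.argmin(s)),
        "green_up": int(np.argmax(delta)),
        "peak": int(np.argmax(s)),
        "senescence": int(np.argmin(delta)),
    }

# Hypothetical monthly EVI for one location (index 0 = January).
monthly_evi = [0.11, 0.10, 0.15, 0.40, 0.60, 0.70, 0.65, 0.60, 0.50, 0.20, 0.12, 0.12]
dates = four_phenology_dates(monthly_evi)
print(dates)
```

Because the stages are located on each site's own EVI curve, a Southern Hemisphere or high-altitude location gets its four dates wherever its growing season actually falls, not at fixed calendar months.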

𝐖𝐡𝐲 𝐭𝐡𝐢𝐬 𝐦𝐚𝐭𝐭𝐞𝐫𝐬: SeCo-Eco achieved state-of-the-art performance on 7 out of 8 downstream ecological tasks, with particularly strong improvements in biomass estimation and climate variable regression. More importantly, this work demonstrates that simple changes in dataset construction can significantly improve AI model performance for ecological applications - crucial as we face an unprecedented biodiversity crisis.

The research highlights how thoughtful data curation, rather than just algorithmic innovation, can advance AI for environmental monitoring and conservation planning.


Enjoy this newsletter? Here are more things you might find helpful:

Office Hours -- Are you a student or young professional with questions about machine learning for pathology or remote sensing? Do you need career advice? Once a month, I'm available to chat about your research, industry trends, career opportunities, or other topics.



My postal address: Pixel Scientia Labs, LLC, PO Box 98412, Raleigh, NC 27624, United States
