The Hidden Costs of Distribution Shift

Most vision AI models don’t fail because the algorithms are weak — they fail when the data changes. Distribution shift is the unseen cliff where lab-ready models fall apart in the real world.

It’s the silent killer of promising pilots.

What Distribution Shift Looks Like

Pathology: A model trained on one lab’s stain colors collapses when tested on another lab’s slides.

Forestry: A model trained to detect deforestation in the Amazon struggles when applied in boreal forests — because tree species, canopy structure, and seasonal signals look entirely different.

Agriculture: A pest detection model trained on one variety of wheat misclassifies disease signs on another variety with different leaf color or texture.

Across every domain, the pattern is the same: models that look bulletproof in development crumble when faced with new, unseen conditions.

Why Leaders Miss It

Metrics during development often look great, creating false confidence. For example, a model that shows 95% accuracy in-house can plummet to 60% when tested on external data. Validation usually happens on “friendly” data — the same lab, same region, same sensor. Stakeholders mistake good pilots for readiness, overlooking how fragile the model really is.

Distribution shift is invisible until it’s too late — unless you test for it deliberately.

The Real Costs

And when distribution shift strikes, the fallout is more than technical. The cost isn’t just lower accuracy — it’s:

Re-validation costs: every new site, lab, or sensor can mean repeating the entire validation process, at a price of hundreds of thousands to millions.

Delays to market: 6–12 months of lost momentum can stall funding rounds, regulatory approvals, or adoption. In pharma or agtech, a six‑month delay can mean $100M+ in lost market share or trial recruitment.

Lost confidence: Teams and investors lose trust when a "working" model fails in deployment.

And these costs don’t appear in isolation. Re-validation expenses combine with delays and lost trust, compounding into missed funding, lost partnerships, and shrinking market opportunities.

The real damage isn’t the error rate. It’s the wasted time, rework, and cascading opportunity loss.

How to Get Ahead of It

Stress-test early: Validate on diverse sites, regions, or sensors from the beginning — for example, across multiple hospitals or forest types.

Anticipate variability: Build data pipelines that capture seasonal, geographic, and device-level differences — such as crop cycles, soil types, or imaging devices.

Involve domain experts: They know where shifts are most likely to occur and how they’ll impact decisions — like pathologists recognizing staining variations or agronomists identifying crop differences.

That’s why proactive oversight matters. This is where I help teams — identifying risks early and designing validation that mirrors the real world.

Closing Thought

The hidden cost of distribution shift isn’t lower accuracy — it’s lost time, lost trust, and lost opportunity.

After 20 years in computer vision, I know where these risks hide. If you want to uncover where distribution shift could derail your vision AI project before it becomes costly, let’s talk. Book a Pixel Clarity Call and we’ll map your risks and next steps together.

- Heather

|

|

|

Closing the Last-Mile Gap in Vision AI for People, Planet, and Science

|

|

|

|

|

|

|

Research: Fine-tuning Foundation Models

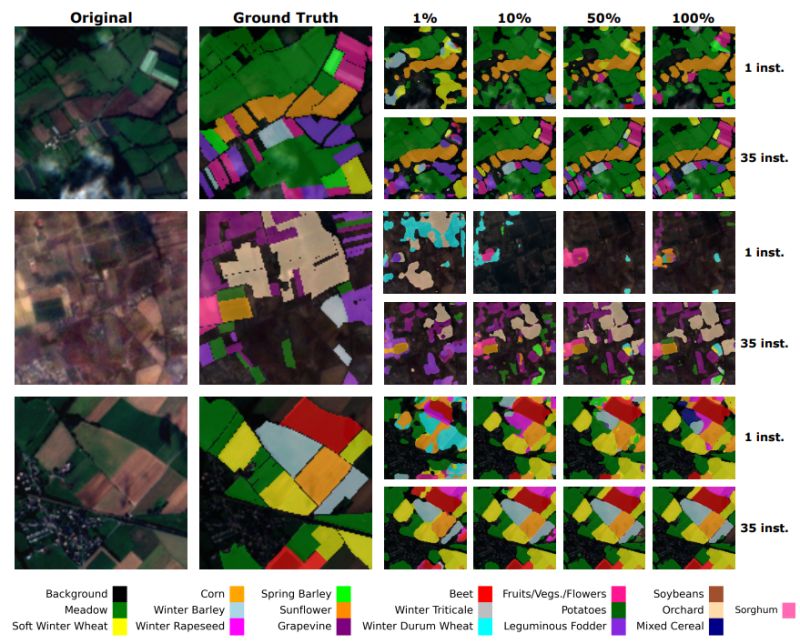

Shaping Fine-Tuning of Geospatial Foundation Models: Effects of Label Availability and Temporal Resolution

Foundation models are transforming Earth observation, but we're still figuring out how to fine-tune them effectively. Giovanni Castiglioni et al. delivered crucial insights that challenge conventional wisdom about transfer learning in satellite imagery.

𝗧𝗵𝗲 𝗰𝗿𝗶𝘁𝗶𝗰𝗮𝗹 𝗾𝘂𝗲𝘀𝘁𝗶𝗼𝗻: How should we adapt these powerful pre-trained models when we have limited labeled satellite data or short temporal sequences? The wrong fine-tuning strategies can waste expensive compute and miss critical agricultural or environmental

patterns.

𝗦𝘂𝗿𝗽𝗿𝗶𝘀𝗶𝗻𝗴 𝗸𝗲𝘆 𝗳𝗶𝗻𝗱𝗶𝗻𝗴𝘀:

• 𝗖𝗼𝘂𝗻𝘁𝗲𝗿𝗶𝗻𝘁𝘂𝗶𝘁𝗶𝘃𝗲 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗿𝗮𝘁𝗲𝘀: Using a smaller learning rate for the pre-trained encoders improves performance in moderate and high data regimes

• 𝗟𝗼𝘄-𝗱𝗮𝘁𝗮 𝗽𝗮𝗿𝗮𝗱𝗼𝘅: Full fine-tuning outperforms partial fine-tuning in very low-label settings — the opposite of what transfer learning theory suggests

• 𝗧𝗲𝗺𝗽𝗼𝗿𝗮𝗹

𝗰𝗼𝗻𝘁𝗲𝘅𝘁 𝗺𝗮𝘁𝘁𝗲𝗿𝘀: Performance plateaus with more time series data, with the biggest gains in label-scarce scenarios

• 𝗙𝗼𝘂𝗻𝗱𝗮𝘁𝗶𝗼𝗻 𝗺𝗼𝗱𝗲𝗹𝘀 𝗰𝗮𝗻 𝗰𝗼𝗺𝗽𝗲𝘁𝗲: Even with abundant labels, properly fine-tuned geospatial foundation models matched specialized task-specific models

𝗧𝗵𝗲 𝗯𝗶𝗴 𝘁𝗮𝗸𝗲𝗮𝘄𝗮𝘆: This behavior suggests a nuanced trade-off between feature reuse and adaptation that defies the intuition of standard transfer learning. The optimal fine-tuning strategy depends heavily on your data

availability.

𝗣𝗿𝗮𝗰𝘁𝗶𝗰𝗮𝗹 𝗴𝘂𝗶𝗱𝗮𝗻𝗰𝗲 𝗳𝗿𝗼𝗺 𝘁𝗵𝗲 𝗿𝗲𝘀𝗲𝗮𝗿𝗰𝗵: For large GFMs, tune learning rate as a key hyperparameter, as no guarantee of alignment of the pretraining and downstream tasks was observed in their experiments. For experiments with abundant labels, fully-supervised models with less computational cost were competitive or superior to larger pretrained models, so consider them as a relevant alternative. Leverage long temporal sequences, particularly when supervision is limited, because even frozen GFMs substantially benefited from richer temporal context.

𝗧𝗵𝗿𝗲𝗲 𝗮𝗰𝘁𝗶𝗼𝗻𝗮𝗯𝗹𝗲

𝗿𝗲𝗰𝗼𝗺𝗺𝗲𝗻𝗱𝗮𝘁𝗶𝗼𝗻𝘀:

- Treat learning rate scaling as a critical hyperparameter, not a fixed choice

- Consider simpler supervised models when you have abundant labels

- Maximize temporal sequences in your data, especially with limited supervision

|

|

|

|

|

|

Research: Data-Centric AI

Less is More? Data Specialization for Self-Supervised Remote Sensing Models

What if removing 98.5% of your training data actually improved your model's performance? Alvard Barseghyan et al. explored whether "more data is better" always holds true in remote sensing foundation models.

𝗧𝗵𝗲 𝗰𝗼𝗻𝘃𝗲𝗻𝘁𝗶𝗼𝗻𝗮𝗹 𝗮𝗽𝗽𝗿𝗼𝗮𝗰𝗵: Data curation typically focuses on reducing computational cost while maintaining quality. Bigger datasets are generally assumed to yield better models, even if some data is redundant.

𝗪𝗵𝘆 𝘁𝗵𝗶𝘀 𝗺𝗮𝘁𝘁𝗲𝗿𝘀:

Annotating aerial imagery is complex and labor-intensive, making self-supervised pretraining important. Understanding when data reduction might help performance could inform more efficient training approaches.

𝗧𝗵𝗲 𝗿𝗲𝘀𝗲𝗮𝗿𝗰𝗵 𝗮𝗽𝗽𝗿𝗼𝗮𝗰𝗵: The team tested whether reducing dataset size can improve model quality under fixed compute budgets. They applied two pruning techniques—SemDeDup and hierarchical clustering—to Million-AID and Maxar datasets, training iBOT models with constant computational resources.

𝗞𝗲𝘆 𝗳𝗶𝗻𝗱𝗶𝗻𝗴𝘀:

- Dataset-dependent results: The impact of data specialization techniques varies significantly across datasets

- Significant improvements in one case: Filtering by hierarchical clustering improves the

transfer of Maxar pretraining by 3 percentage points while removing 98.5% of the dataset

- Limited applicability: Neither of the filtering methods improve the transfer of Million-AID pretraining

- The distractor hypothesis: Large clusters in datasets may contain redundant or low-value images that negatively impact downstream performance

𝗞𝗲𝘆 𝗰𝗵𝗮𝗹𝗹𝗲𝗻𝗴𝗲: Is it possible to identify distracting images in large datasets without extensive pretraining experiments? Current methods require expensive trial-and-error approaches to determine which data helps or hurts performance.

𝗣𝗿𝗮𝗰𝘁𝗶𝗰𝗮𝗹 𝗰𝗼𝗻𝘀𝗶𝗱𝗲𝗿𝗮𝘁𝗶𝗼𝗻: Before assuming more data is better, it may be worth investigating whether your dataset

contains distractors. Hierarchical clustering could help determine if aggressive pruning might improve performance.

Have you observed similar patterns in your remote sensing datasets? What approaches do you use to assess data quality?

Leave a comment

|

|

|

|

|

|

|

Insights: Last-Mile AI Gap

The fastest way to burn runway in vision AI isn't salaries. It's the last mile.

I’ve seen startups spend 6–12 months building models that look bulletproof in the lab.

Metrics climb. Confidence soars.

Then the last-mile AI gap appears:

- Robustness → collapsing under new devices, environments, or conditions

- Trust → confidence breaking when outputs can’t be validated

- Adoption → stalling because results don’t drive real decisions

By then, the costs are enormous:

- Hundreds of thousands in salaries already spent

- Another $500K–$1M in rework

- A funding round delayed—or credibility lost with investors

That’s why I tell founders: my services aren’t a cost. They’re insurance.

You don’t buy insurance after the crash—you buy it to avoid the

wreck.

The last-mile AI gap is where promising CV prototypes stall.

The question is: will you uncover those risks when it’s cheap—or when it’s catastrophic?

👉 What’s the most expensive last-mile mistake you’ve seen in AI?

Leave a comment

|

|

|

|

|

|

Enjoy this newsletter? Here are more things you might find helpful:

Pixel Clarity Call - A free 30-minute conversation to cut through the noise and see where your vision AI project really stands. We’ll pinpoint vulnerabilities, clarify your biggest challenges, and decide if an assessment or diagnostic could save you time, money, and credibility.

Book now

|

|

|

Did someone forward this email to you, and you want to sign up for more? Subscribe to future emails

This email was sent to _t.e.s.t_@example.com. Want to change to a different address? Update subscription

Want to get off this list? Unsubscribe

My postal address: Pixel Scientia Labs, LLC, PO Box 98412, Raleigh, NC 27624, United States

|

|

|

|

|