
Insights: The Last-Mile AI Gap

💥 𝗪𝗵𝗮𝘁’𝘀 𝘁𝗵𝗲 𝗺𝗼𝘀𝘁 𝗲𝘅𝗽𝗲𝗻𝘀𝗶𝘃𝗲 𝗺𝗶𝘀𝘁𝗮𝗸𝗲 𝗶𝗻 𝗔𝗜-𝗱𝗿𝗶𝘃𝗲𝗻 𝗱𝗿𝘂𝗴 𝗱𝗲𝘃𝗲𝗹𝗼𝗽𝗺𝗲𝗻𝘁?

𝗜𝘁 𝗶𝘀𝗻’𝘁 𝗯𝘂𝗶𝗹𝗱𝗶𝗻𝗴 𝘁𝗵𝗲 𝗺𝗼𝗱𝗲𝗹.

𝗜𝘁’𝘀 𝗱𝗶𝘀𝗰𝗼𝘃𝗲𝗿𝗶𝗻𝗴, 𝘁𝗼𝗼 𝗹𝗮𝘁𝗲, 𝘁𝗵𝗮𝘁 𝗶𝘁 𝗱𝗼𝗲𝘀𝗻’𝘁 𝘄𝗼𝗿𝗸 𝗼𝘂𝘁𝘀𝗶𝗱𝗲 𝘆𝗼𝘂𝗿 𝗱𝗮𝘁𝗮𝘀𝗲𝘁.

A pharma team I spoke with had a promising pathology AI biomarker for immunotherapy response. On their internal slides, it looked bulletproof. Confidence was sky-high.

But when external trial sites joined, performance collapsed. Overnight, accuracy dropped by nearly half.

𝗪𝗵𝘆?

◉ A different scanner

◉ A slightly altered staining protocol

◉ A more diverse patient population

The science hadn’t failed.

𝗩𝗮𝗿𝗶𝗮𝗯𝗶𝗹𝗶𝘁𝘆 𝗵𝗮𝗱.

👉 Have you seen this yourself — a model that worked beautifully in-house but stumbled in validation?

📊 𝗧𝗵𝗲 𝘀𝘁𝗮𝗸𝗲𝘀:

- Phase 2 trial failure = $20–40M lost (DiMasi et al., 2016)

- Phase 3 trial failure = $200–300M gone (BIO, 2018)

- Oncology Phase 3 success rates are only 30–40%

- 6–12 month delay = $100–200M in lost revenue (IQVIA, 2021)

This is the last-mile AI gap — the point between discovery and deployment where promising biomarkers fall apart under real-world variability.

💡 Robustness and data gaps must be identified early, when fixes are cheap — not discovered late, when costs explode.

That’s why I focus on helping teams surface these gaps before trials are at risk.
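One early check is simply to break performance down by site or scanner instead of reporting a single pooled number. The sketch below is a minimal illustration under my own assumptions: the record fields (`site`, `label`, `pred`), the helper names, the toy data, and the 10-point drop threshold are all hypothetical and not taken from any real trial.

```python
from collections import defaultdict


def accuracy_by_group(records, group_key):
    """Return {group: accuracy} for dicts that carry 'label' and 'pred'."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        g = r[group_key]
        total[g] += 1
        correct[g] += int(r["label"] == r["pred"])
    return {g: correct[g] / total[g] for g in total}


def flag_gaps(acc_by_group, reference_group, max_drop=0.10):
    """List groups whose accuracy is more than max_drop below the reference."""
    ref = acc_by_group[reference_group]
    return sorted(g for g, a in acc_by_group.items() if ref - a > max_drop)


# Toy, made-up records purely for illustration.
records = [
    {"site": "internal", "label": 1, "pred": 1},
    {"site": "internal", "label": 0, "pred": 0},
    {"site": "internal", "label": 1, "pred": 1},
    {"site": "internal", "label": 0, "pred": 0},
    {"site": "external_A", "label": 1, "pred": 0},
    {"site": "external_A", "label": 0, "pred": 0},
    {"site": "external_A", "label": 1, "pred": 0},
    {"site": "external_A", "label": 0, "pred": 1},
]

per_site = accuracy_by_group(records, "site")
flagged = flag_gaps(per_site, "internal")
print(per_site, flagged)
```

The same grouping can be repeated for scanner model, staining batch, or patient subgroup. A pooled metric can hide exactly the kind of site-level collapse described above.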

👉 Curious how others are tackling this? DM me — I’m happy to share a guide on early robustness checks in pathology AI.


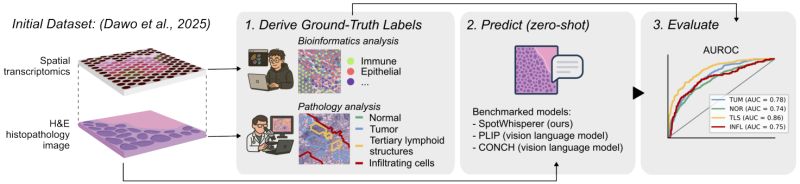

Research: Linking Histology and Gene Expression

Molecularly informed analysis of histopathology images using natural language

What if pathologists could explore gene expression patterns in tissue images using simple text queries? Moritz Schaefer et al. introduce SpotWhisperer, an AI method that connects the visual and molecular worlds of cancer diagnosis.

𝗧𝗵𝗲 𝗰𝘂𝗿𝗿𝗲𝗻𝘁 𝗹𝗶𝗺𝗶𝘁𝗮𝘁𝗶𝗼𝗻: Histopathology refers to the microscopic examination of diseased tissues and routinely guides treatment decisions for cancer and other diseases. Currently, this analysis focuses on morphological features but rarely considers gene expression information, which can add an important molecular dimension.

𝗪𝗵𝘆 𝘁𝗵𝗶𝘀 𝗺𝗮𝘁𝘁𝗲𝗿𝘀: Traditional pathology relies on visual examination of tissue structure, but molecular information like gene expression patterns can reveal critical insights about disease mechanisms, treatment responses, and prognosis. The challenge has been integrating these two data types in a practical, accessible way.

𝗧𝗵𝗲 𝗦𝗽𝗼𝘁𝗪𝗵𝗶𝘀𝗽𝗲𝗿𝗲𝗿 𝗮𝗽𝗽𝗿𝗼𝗮𝗰𝗵: SpotWhisperer is an AI method that links histopathological images to spatial gene expression profiles and their text annotations, enabling molecularly grounded histopathology analysis through natural language.

𝗞𝗲𝘆 𝗶𝗻𝗻𝗼𝘃𝗮𝘁𝗶𝗼𝗻𝘀:

• 𝗡𝗮𝘁𝘂𝗿𝗮𝗹 𝗹𝗮𝗻𝗴𝘂𝗮𝗴𝗲 𝗶𝗻𝘁𝗲𝗿𝗳𝗮𝗰𝗲: Pathologists can query tissue images using free-text to explore cell types and disease mechanisms

• 𝗦𝗽𝗮𝘁𝗶𝗮𝗹 𝗴𝗲𝗻𝗲 𝗲𝘅𝗽𝗿𝗲𝘀𝘀𝗶𝗼𝗻 𝗶𝗻𝗳𝗲𝗿𝗲𝗻𝗰𝗲: The method predicts molecular patterns directly from standard H&E-stained slides

• 𝗦𝘂𝗽𝗲𝗿𝗶𝗼𝗿 𝗽𝗲𝗿𝗳𝗼𝗿𝗺𝗮𝗻𝗰𝗲: Their method outperforms pathology vision-language models on a newly curated benchmark dataset dedicated to spatially resolved H&E annotation

• 𝗜𝗻𝘁𝗲𝗿𝗮𝗰𝘁𝗶𝘃𝗲 𝗲𝘅𝗽𝗹𝗼𝗿𝗮𝘁𝗶𝗼𝗻: Integrated into a web interface, SpotWhisperer enables interactive exploration of cell types and disease mechanisms using free-text queries with access to inferred spatial gene expression profiles

𝗣𝗿𝗮𝗰𝘁𝗶𝗰𝗮𝗹 𝗶𝗺𝗽𝗮𝗰𝘁: This approach could democratize access to molecular insights by making them available from routine pathology slides, without requiring expensive additional testing. Pathologists could ask questions like "Show me areas with high immune cell activity" or "Where are the proliferating cancer cells?" and get both visual and molecular answers.
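To give a rough feel for how a free-text query could be matched to tissue regions, here is a generic shared-embedding retrieval sketch. It is not SpotWhisperer's implementation. I am assuming that the query and each tissue spot have already been mapped into the same vector space by some encoder, and the spot IDs, vectors, and function names below are hypothetical stand-ins.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_spots(query_vec, spot_vecs, top_k=2):
    """Return the top_k spot IDs most similar to the query embedding."""
    scored = [(cosine(query_vec, v), spot_id) for spot_id, v in spot_vecs.items()]
    scored.sort(reverse=True)
    return [spot_id for _, spot_id in scored[:top_k]]


# Hypothetical embeddings; a real system would get these from trained encoders.
spot_vecs = {
    "spot_1": [0.9, 0.1, 0.0],
    "spot_2": [0.1, 0.9, 0.1],
    "spot_3": [0.7, 0.3, 0.1],
}
query_vec = [1.0, 0.0, 0.0]  # stand-in for an embedded text query

top = rank_spots(query_vec, spot_vecs)
print(top)
```

In a setup like this, the highest-scoring spots would be highlighted on the slide, and the hard part is training encoders that put text, image, and expression data in a comparable space.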

The work represents a step toward making molecular pathology more accessible and intuitive, potentially transforming how we analyze tissue samples in clinical practice.


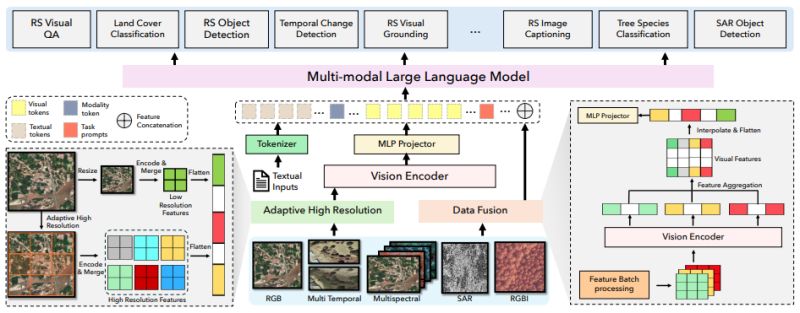

Research: Vision-Language Model for EO

EarthDial: Turning Multi-sensory Earth Observations to Interactive Dialogues

Imagine asking an AI to analyze satellite imagery in plain English: "Are there any methane plumes in this hyperspectral image?" or "What changes occurred between these two radar images after the disaster?"

Sagar Soni et al. introduced EarthDial at CVPR 2025: the first conversational vision-language model specifically designed for Earth observation data. Unlike generic AI models that struggle with remote sensing imagery, EarthDial can understand and analyze multi-spectral, multi-temporal, and multi-resolution satellite data through natural language conversations.

𝐖𝐡𝐲 𝐭𝐡𝐢𝐬 𝐦𝐚𝐭𝐭𝐞𝐫𝐬: General-purpose models like GPT-4V perform poorly on remote sensing tasks, achieving low accuracies on domain-specific data. Meanwhile, the growing volume of Earth observation data from satellites requires specialized tools that can handle the unique spectral, temporal, and geospatial complexities of this data.

EarthDial addresses this gap by supporting diverse sensor modalities (RGB, SAR, multispectral, infrared) and enabling applications from disaster response to precision agriculture. The model was trained on an extensive dataset of over 11 million instruction pairs and demonstrates superior performance across 44 downstream tasks including change detection, object identification, and environmental monitoring.

This represents a significant step toward making Earth observation data more accessible to domain experts who may not have deep technical backgrounds in remote sensing or computer vision.


Insights: Domain Expertise

Prediction Without Reasoning Isn't Diagnosis

Models make predictions. Pathologists make decisions.

𝐖𝐡𝐚𝐭 𝐏𝐚𝐭𝐡𝐨𝐥𝐨𝐠𝐢𝐬𝐭𝐬 𝐒𝐞𝐞 𝐓𝐡𝐚𝐭 𝐀𝐈 𝐒𝐭𝐢𝐥𝐥 𝐌𝐢𝐬𝐬𝐞𝐬

Even high-performing pathology models often fail to reflect how real diagnoses happen in the clinic.

Pathologists draw from a broad set of inputs:

- Reviewing clinical history

- Comparing prior slides and context

- Assessing spatial patterns across the specimen

- Recognizing rare presentations from experience

But many current models still lack key ingredients for clinical relevance.

Most pathology AI models:

- Focus on narrow patch-level predictions

- Lack spatial continuity or contextual awareness

- Don’t integrate non-image clinical data

- Miss higher-order patterns like lesion symmetry or progression across sections

- Fail to consider contextual clues like adjacent tissue types

𝐂𝐥𝐢𝐧𝐢𝐜𝐚𝐥 𝐑𝐞𝐚𝐥𝐢𝐭𝐲: 𝐂𝐨𝐧𝐭𝐞𝐱𝐭 𝐂𝐨𝐦𝐞𝐬 𝐅𝐢𝐫𝐬𝐭

🧪 In prostate biopsies, a pathologist doesn't rely on one core alone; they scan across multiple slides to assess the distribution and extent of disease. A model that analyzes each slide in isolation may miss this broader pattern, leading to underestimation or misclassification.

🧪 In breast pathology, a diagnosis of DCIS vs. invasive carcinoma may depend on identifying subtle invasion across multiple tissue sections—something current patch-based models often overlook.
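The multi-slide point in the prostate example can be made concrete with a small case-level summary. This is a minimal sketch under my own assumptions: the per-core tumor probabilities, the 0.5 threshold, and the `summarize_case` helper are hypothetical, and a real pipeline would aggregate far richer information than a single score per core.

```python
def summarize_case(core_probs, threshold=0.5):
    """Aggregate per-core tumor probabilities into case-level descriptors."""
    positive = [p for p in core_probs if p >= threshold]
    return {
        "n_cores": len(core_probs),
        "n_positive": len(positive),
        "fraction_positive": len(positive) / len(core_probs),
        "max_prob": max(core_probs),
    }


# Made-up per-core scores for one patient, purely for illustration.
case = [0.05, 0.62, 0.71, 0.10, 0.55, 0.08]
summary = summarize_case(case)
print(summary)
```

A per-slide model would hand back six separate scores. The case-level view instead shows that half the cores are involved, which is closer to how extent of disease is actually judged.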

🧠 Diagnosis is not just a visual task. It’s a form of clinical reasoning that connects patient context, morphology, and interpretive judgment.

When model outputs don’t align with clinical reasoning, they’re less likely to be trusted, adopted, or used in decisions that affect patient care.

That gap between prediction and reasoning explains why even high-performing models often go unused. If your model doesn’t reflect how pathologists actually think, it won’t earn their trust.

The next generation of models will need to reason across slides, integrate context, and reflect the pathologist’s mindset—not just mimic labels.

𝐓𝐚𝐤𝐞𝐚𝐰𝐚𝐲: If your goal is clinical trust, your model must go beyond classification, toward reasoning that mirrors the pathologist’s thought process.

💡 Developer test: Ask a pathologist: “Would this output help or hinder your decision?” Use the answer to validate whether your model supports clinical reasoning—not just classification.

📣 𝑊ℎ𝑎𝑡 𝑑𝑜𝑒𝑠 𝑦𝑜𝑢𝑟 𝑚𝑜𝑑𝑒𝑙 𝑚𝑖𝑠𝑠 𝑡ℎ𝑎𝑡 𝑎 𝑔𝑜𝑜𝑑 𝑝𝑎𝑡ℎ𝑜𝑙𝑜𝑔𝑖𝑠𝑡 𝑛𝑒𝑣𝑒𝑟 𝑤𝑜𝑢𝑙𝑑?


Enjoy this newsletter? Here are more things you might find helpful:

1 Hour Strategy Session -- What if you could talk to an expert quickly? Are you facing a specific machine learning challenge? Do you have a pressing question? Schedule a 1 Hour Strategy Session now. Ask me anything about whatever challenges you’re facing. I’ll give you no-nonsense advice that you can put into action immediately.




My postal address: Pixel Scientia Labs, LLC, PO Box 98412, Raleigh, NC 27624, United States
